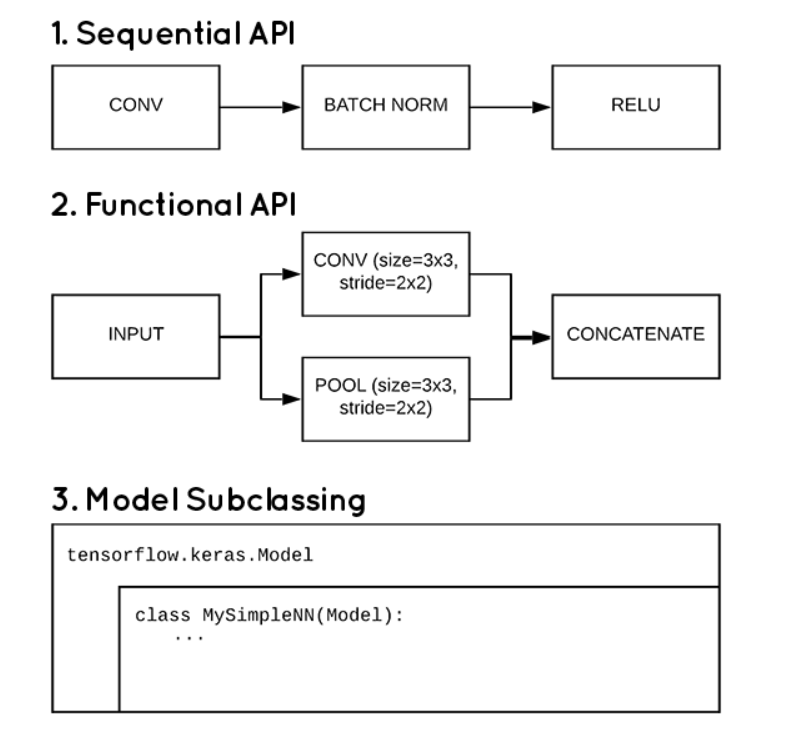

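3 Ways to Build Keras Models with TensorFlow 2.0

The three approaches are the Sequential API, the Functional API, and model subclassing.

While trying to recall the TensorFlow code for building deep models, I noticed that Keras offers several different ways to construct a model, so I looked things up and put together these notes. The main reference is this article, which contains complete code for many of the examples shown here.

The overall taxonomy of models is as follows: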

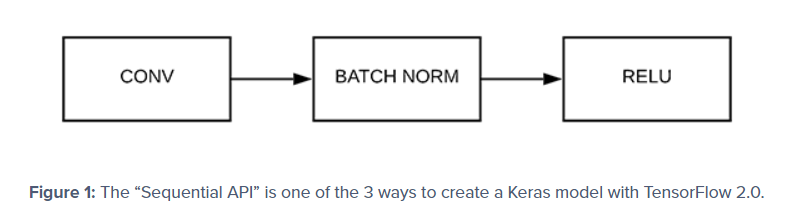

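Sequential model (Sequential API)

This kind of model is built one layer after another and is the simplest approach, for example CONV --> Batch Normalization --> ReLU --> POOLING. The model has no branches, with a single input and a single output.

Models that are usually easy to build with the Sequential API include: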

- LeNet

- AlexNet

- VGGNet

Below is a simple example of a Sequential model:

    # imports needed by the example
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Conv2D, Activation, Flatten, Dense

    def shallownet_sequential(width, height, depth, classes):
        # initialize the model along with the input shape to be
        # "channels last" ordering
        model = Sequential()
        inputShape = (height, width, depth)

        # define the first (and only) CONV => RELU layer
        model.add(Conv2D(32, (3, 3), padding="same",
            input_shape=inputShape))
        model.add(Activation("relu"))

        # softmax classifier
        model.add(Flatten())
        model.add(Dense(classes))
        model.add(Activation("softmax"))

        # return the constructed network architecture
        return model

In Keras, any model that is an instance of the Sequential class is using the Sequential API. Further layers are then appended with model.add.
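As a small complement to the model.add style, here is a minimal sketch of the same shallow architecture with the layers passed to the Sequential constructor as a list. The concrete sizes (32x32x3 images, 10 classes) are my own assumptions for illustration and do not come from the original article.

```python
import numpy as np
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Input, Conv2D, Activation, Flatten, Dense

# Hypothetical sizes, chosen only for illustration.
height, width, depth, classes = 32, 32, 3, 10

# Same layer stack as shallownet_sequential, but the layers are handed to
# the constructor as a list instead of being added one at a time.
model = Sequential([
    Input(shape=(height, width, depth)),
    Conv2D(32, (3, 3), padding="same"),
    Activation("relu"),
    Flatten(),
    Dense(classes),
    Activation("softmax"),
])

# Push a dummy batch of 4 images through the network to check the shapes.
batch = np.zeros((4, height, width, depth), dtype="float32")
probs = model.predict(batch, verbose=0)
print(probs.shape)  # one row of class probabilities per image
```

Both styles produce a Sequential instance; which one to use is mostly a matter of taste.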

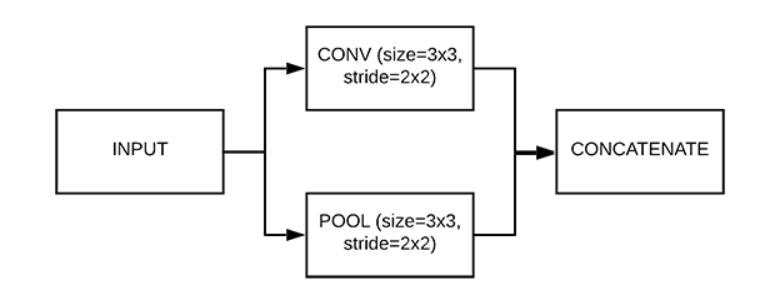

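Functional model (Functional API)

The Functional API has the following advantages:

- It can build models with multiple inputs and multiple outputs

- It makes branching structures easy to build (such as an Inception block or a ResNet block)

- It makes it easy to share layers inside the architecture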
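The points above can be sketched with a toy model that has two inputs, one shared layer, and two outputs. All layer names and sizes here are assumptions made up for the illustration; this is not code from the original article.

```python
from tensorflow.keras.layers import Input, Dense, concatenate
from tensorflow.keras.models import Model

# Two inputs with the same feature size (16 is an arbitrary choice).
input_a = Input(shape=(16,), name="input_a")
input_b = Input(shape=(16,), name="input_b")

# A single Dense layer applied to both inputs, so its weights are shared.
shared = Dense(8, activation="relu", name="shared_dense")
encoded_a = shared(input_a)
encoded_b = shared(input_b)

# Merge the two branches, then split into two output heads.
merged = concatenate([encoded_a, encoded_b])
class_out = Dense(3, activation="softmax", name="class_out")(merged)
score_out = Dense(1, name="score_out")(merged)

model = Model(inputs=[input_a, input_b], outputs=[class_out, score_out])
model.summary()
```

Because shared_dense is one layer object called twice, its weights are counted once in the summary even though it appears in both branches.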

Models that are usually easy to build with the Functional API (they contain branches between layers) include:

- ResNet

- GoogLeNet/Inception

- Xception

- SqueezeNet

- DenseNet
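What these architectures have in common is a branch that is later merged back in. As a rough sketch of the idea, here is a simplified residual block in the Functional API; it is my own reduced version for illustration, not the exact block from the ResNet paper, and the helper name residual_block and all sizes are hypothetical.

```python
from tensorflow.keras.layers import (Input, Conv2D, BatchNormalization,
                                     Activation, add)
from tensorflow.keras.models import Model

def residual_block(x, filters):
    # keep a reference to the input so it can skip past the convolutions
    shortcut = x

    # main path: CONV => BN => RELU => CONV => BN
    y = Conv2D(filters, (3, 3), padding="same")(x)
    y = BatchNormalization()(y)
    y = Activation("relu")(y)
    y = Conv2D(filters, (3, 3), padding="same")(y)
    y = BatchNormalization()(y)

    # merge the two branches by element-wise addition, then apply RELU
    y = add([shortcut, y])
    return Activation("relu")(y)

# the input already has 16 channels, so the shortcut and the main path
# have matching shapes and can be added directly
inputs = Input(shape=(32, 32, 16))
outputs = residual_block(inputs, 16)
model = Model(inputs, outputs, name="tiny_residual")
```

The skip connection is just a Python variable that is reused later, which is something the Sequential API cannot express.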

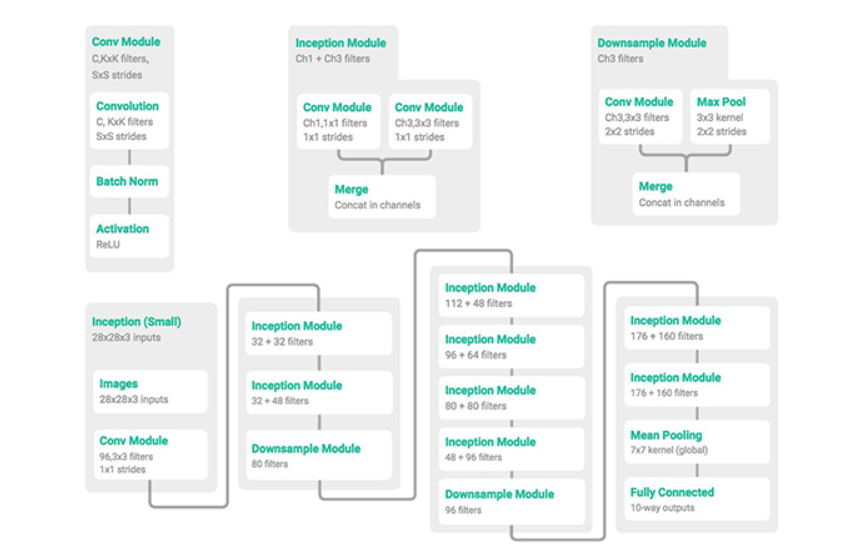

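Here is the MiniGoogLeNet example from the original article:

It looks complicated, but once it is split into modules it becomes very clear. The code is as follows: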

    # imports needed by the example
    from tensorflow.keras.models import Model
    from tensorflow.keras.layers import (Input, Conv2D, BatchNormalization,
        Activation, MaxPooling2D, AveragePooling2D, Dropout, Flatten,
        Dense, concatenate)

    def minigooglenet_functional(width, height, depth, classes):
        def conv_module(x, K, kX, kY, stride, chanDim, padding="same"):
            # define a CONV => BN => RELU pattern
            x = Conv2D(K, (kX, kY), strides=stride, padding=padding)(x)
            x = BatchNormalization(axis=chanDim)(x)
            x = Activation("relu")(x)

            # return the block
            return x

        def inception_module(x, numK1x1, numK3x3, chanDim):
            # define two CONV modules, then concatenate across the
            # channel dimension
            conv_1x1 = conv_module(x, numK1x1, 1, 1, (1, 1), chanDim)
            conv_3x3 = conv_module(x, numK3x3, 3, 3, (1, 1), chanDim)
            x = concatenate([conv_1x1, conv_3x3], axis=chanDim)

            # return the block
            return x

        def downsample_module(x, K, chanDim):
            # define the CONV module and POOL, then concatenate
            # across the channel dimensions
            conv_3x3 = conv_module(x, K, 3, 3, (2, 2), chanDim,
                padding="valid")
            pool = MaxPooling2D((3, 3), strides=(2, 2))(x)
            x = concatenate([conv_3x3, pool], axis=chanDim)

            # return the block
            return x

        # initialize the input shape to be "channels last" and the
        # channels dimension itself
        inputShape = (height, width, depth)
        chanDim = -1

        # define the model input and first CONV module
        inputs = Input(shape=inputShape)
        x = conv_module(inputs, 96, 3, 3, (1, 1), chanDim)

        # two Inception modules followed by a downsample module
        x = inception_module(x, 32, 32, chanDim)
        x = inception_module(x, 32, 48, chanDim)
        x = downsample_module(x, 80, chanDim)

        # four Inception modules followed by a downsample module
        x = inception_module(x, 112, 48, chanDim)
        x = inception_module(x, 96, 64, chanDim)
        x = inception_module(x, 80, 80, chanDim)
        x = inception_module(x, 48, 96, chanDim)
        x = downsample_module(x, 96, chanDim)

        # two Inception modules followed by global POOL and dropout
        x = inception_module(x, 176, 160, chanDim)
        x = inception_module(x, 176, 160, chanDim)
        x = AveragePooling2D((7, 7))(x)
        x = Dropout(0.5)(x)

        # softmax classifier
        x = Flatten()(x)
        x = Dense(classes)(x)
        x = Activation("softmax")(x)

        # create the model
        model = Model(inputs, x, name="minigooglenet")

        # return the constructed network architecture
        return model

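Read alongside the figure, the structure is clear at a glance.

Subclassed model (Model subclassing)

This style of definition was not very common in TensorFlow 1.x, but anyone familiar with PyTorch will know it by heart, because PyTorch models are almost always built this way. It follows the idea of object-oriented programming.

In practice this approach is considerably more work than the previous two, but it still has its advantages. Quoting the explanation from the original article: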

Exotic architectures or custom layer/model implementations, especially those utilized by researchers, can be extremely challenging, if not impossible, to implement using the standard Sequential or Functional APIs.

Instead, researchers wish to have control over every nuance of the network and training process — and that’s exactly what model subclassing provides them.

The essence is that researchers can control every nuance of the network and of the training process.
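To give a feel for what that control over the training process can look like, here is a minimal sketch of a subclassed model trained with a hand-written loop using tf.GradientTape. The class TinyClassifier, the random toy data, and all hyperparameters are assumptions invented for this illustration; they are not from the original article.

```python
import numpy as np
import tensorflow as tf

tf.random.set_seed(0)  # make the toy run repeatable

class TinyClassifier(tf.keras.Model):
    # hypothetical two-layer classifier, only for illustration
    def __init__(self, classes):
        super().__init__()
        self.hidden = tf.keras.layers.Dense(16, activation="relu")
        self.out = tf.keras.layers.Dense(classes)  # outputs raw logits

    def call(self, inputs):
        return self.out(self.hidden(inputs))

model = TinyClassifier(classes=3)
loss_fn = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)
optimizer = tf.keras.optimizers.SGD(learning_rate=0.1)

# random toy data: 64 samples, 8 features, 3 classes
rng = np.random.default_rng(0)
x = rng.normal(size=(64, 8)).astype("float32")
y = rng.integers(0, 3, size=(64,))

def train_step(x_batch, y_batch):
    # record the forward pass so gradients can be computed afterwards
    with tf.GradientTape() as tape:
        logits = model(x_batch, training=True)
        loss = loss_fn(y_batch, logits)
    # every step here is explicit and could be customised
    grads = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(grads, model.trainable_variables))
    return float(loss)

# 20 full-batch gradient steps on the toy data
losses = [train_step(x, y) for _ in range(20)]
print(losses[0], losses[-1])
```

Everything inside train_step (the loss, the gradient computation, the update) is ordinary Python that can be changed freely, which is the kind of flexibility the quote refers to.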

Here is how a VGGNet-style model (MiniVGGNetModel) is written with this approach:

    # imports needed by the example
    from tensorflow.keras.models import Model
    from tensorflow.keras.layers import (Conv2D, BatchNormalization,
        Activation, MaxPooling2D, Flatten, Dense, Dropout)

    class MiniVGGNetModel(Model):
        def __init__(self, classes, chanDim=-1):
            # call the parent constructor
            super(MiniVGGNetModel, self).__init__()

            # initialize the layers in the first (CONV => RELU) * 2 => POOL
            # layer set
            self.conv1A = Conv2D(32, (3, 3), padding="same")
            self.act1A = Activation("relu")
            self.bn1A = BatchNormalization(axis=chanDim)
            self.conv1B = Conv2D(32, (3, 3), padding="same")
            self.act1B = Activation("relu")
            self.bn1B = BatchNormalization(axis=chanDim)
            self.pool1 = MaxPooling2D(pool_size=(2, 2))

            # initialize the layers in the second (CONV => RELU) * 2 => POOL
            # layer set
            self.conv2A = Conv2D(32, (3, 3), padding="same")
            self.act2A = Activation("relu")
            self.bn2A = BatchNormalization(axis=chanDim)
            self.conv2B = Conv2D(32, (3, 3), padding="same")
            self.act2B = Activation("relu")
            self.bn2B = BatchNormalization(axis=chanDim)
            self.pool2 = MaxPooling2D(pool_size=(2, 2))

            # initialize the layers in our fully-connected layer set
            self.flatten = Flatten()
            self.dense3 = Dense(512)
            self.act3 = Activation("relu")
            self.bn3 = BatchNormalization()
            self.do3 = Dropout(0.5)

            # initialize the layers in the softmax classifier layer set
            self.dense4 = Dense(classes)
            self.softmax = Activation("softmax")

        def call(self, inputs):
            # build the first (CONV => RELU) * 2 => POOL layer set
            x = self.conv1A(inputs)
            x = self.act1A(x)
            x = self.bn1A(x)
            x = self.conv1B(x)
            x = self.act1B(x)
            x = self.bn1B(x)
            x = self.pool1(x)

            # build the second (CONV => RELU) * 2 => POOL layer set
            x = self.conv2A(x)
            x = self.act2A(x)
            x = self.bn2A(x)
            x = self.conv2B(x)
            x = self.act2B(x)
            x = self.bn2B(x)
            x = self.pool2(x)

            # build our FC layer set
            x = self.flatten(x)
            x = self.dense3(x)
            x = self.act3(x)
            x = self.bn3(x)
            x = self.do3(x)

            # build the softmax classifier
            x = self.dense4(x)
            x = self.softmax(x)

            # return the constructed model
            return x

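It may be hard to see why anyone would write a model this way, and I cannot fully explain it myself. It is the object-oriented way of thinking, which amounts to a different way of looking at the problem. Although the example above is essentially a sequential model, subclassing can still express complex bypass (branch) structures as well as multi-input, multi-output cases.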
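As a rough sketch of that last point, here is a small subclassed model with two inputs, two branches, and two outputs. The class name TwoBranchModel and every size in it are hypothetical, picked only to keep the example short.

```python
import tensorflow as tf
from tensorflow.keras.layers import Dense, Concatenate

class TwoBranchModel(tf.keras.Model):
    # hypothetical model: two input branches merged into two output heads
    def __init__(self, classes):
        super().__init__()
        self.branch_a = Dense(8, activation="relu")
        self.branch_b = Dense(8, activation="relu")
        self.concat = Concatenate()
        self.class_head = Dense(classes, activation="softmax")
        self.score_head = Dense(1)

    def call(self, inputs):
        # inputs is a pair of tensors, one per branch
        input_a, input_b = inputs
        merged = self.concat([self.branch_a(input_a),
                              self.branch_b(input_b)])
        # return one tensor per output head
        return self.class_head(merged), self.score_head(merged)

model = TwoBranchModel(classes=3)

# two dummy inputs with different feature sizes (batch of 4)
a = tf.zeros((4, 5))
b = tf.zeros((4, 7))
probs, score = model((a, b))
print(probs.shape, score.shape)
```

The branching lives entirely in call, so any control flow that Python allows can be used there.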

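I will add more here once I have thought about this part more deeply (leaving this as a to-do) 🤣.

Those are the 3 ways to build Keras models with TensorFlow 2.0. Each has its own strengths, but newcomers may wonder why model-building code looks so different from place to place: the official documentation uses the Sequential API in one spot, while some tutorial uses the Functional API or even model subclassing. I hope these notes help, and that they prompt some further thinking.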
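To make the comparison concrete, here is a minimal sketch that builds the same tiny two-layer network in all three styles and checks that they end up with the same number of parameters. The network itself (20 inputs, 16 hidden units, 4 classes) is an arbitrary assumption of mine, chosen so the example stays short.

```python
import tensorflow as tf
from tensorflow.keras import Input, Model, Sequential
from tensorflow.keras.layers import Dense

# 1) Sequential API: a plain stack of layers
seq_model = Sequential([
    Input(shape=(20,)),
    Dense(16, activation="relu"),
    Dense(4, activation="softmax"),
])

# 2) Functional API: layers are called on tensors, then wrapped in a Model
inputs = Input(shape=(20,))
x = Dense(16, activation="relu")(inputs)
outputs = Dense(4, activation="softmax")(x)
func_model = Model(inputs, outputs)

# 3) Model subclassing: layers in __init__, forward pass in call
class SubclassedMLP(Model):
    def __init__(self):
        super().__init__()
        self.hidden = Dense(16, activation="relu")
        self.out = Dense(4, activation="softmax")

    def call(self, inputs):
        return self.out(self.hidden(inputs))

sub_model = SubclassedMLP()
# a subclassed model only creates its weights once it has seen an input
sub_model(tf.zeros((1, 20)))

for name, m in [("sequential", seq_model),
                ("functional", func_model),
                ("subclassed", sub_model)]:
    print(name, m.count_params())
```

The three objects differ only in how the graph of layers is described; for a simple stack like this one, the resulting networks are equivalent.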

References: